Cyclomatic complexity is one of those programmer-geek things I concern myself with that, I've found, many other developers either don't care about or aren't even aware of.

For years I’ve been using SourceMonitor from Campwood Software to keep an eye on the various projects I work on, and gauge where the code could use some TLC.

The formal definition of cyclomatic complexity is rather esoteric (it counts the number of independent paths through a piece of code), but simply put, it can be looked at as a way of measuring how 'messy' your source code is. The messiness of the code is a decent indicator of how difficult the code is to maintain, how fragile it is, and how likely you are to break it when you make a change.
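To make that a little more concrete, here's a rough sketch of the usual shortcut for the metric: start at 1 and add one for every decision point. This is my own simplified counter built on Python's standard `ast` module, not how SourceMonitor does it. The list of node types it counts and the names `approx_complexity` and `SAMPLE` are assumptions for illustration, and real tools differ in the details.

```python
import ast

# Node types treated as decision points in this simplified count.
# This list is an assumption; real tools differ in what they count.
_BRANCH_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def approx_complexity(source: str) -> int:
    """Return 1 plus the number of decision points found in `source`."""
    tree = ast.parse(source)
    decisions = 0
    for node in ast.walk(tree):
        if isinstance(node, _BRANCH_NODES):
            decisions += 1
        elif isinstance(node, ast.BoolOp):
            # `a and b and c` has three operands, so two extra branches
            decisions += len(node.values) - 1
    return 1 + decisions


SAMPLE = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in range(2, n):
        if n % d == 0 and d > 1:
            return "composite"
    return "other"
'''

# if + elif + for + inner if + one `and` = 5 decision points, plus 1
print(approx_complexity(SAMPLE))  # prints 6
```

A straight-line script with no branches scores 1, and every `if`, loop, or boolean operator nudges the number up by one.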

A commonly accepted ideal limit to complexity in a given chunk of code is 10. That is, code that has a measured complexity of 10 or less is easy to understand, maintain, debug and modify. In my own experience, a complexity value of up to 20 is pretty workable (and sometimes unavoidable). As long as the code is consistently formatted, has good naming conventions, and is clearly documented with comments, you can live with it.

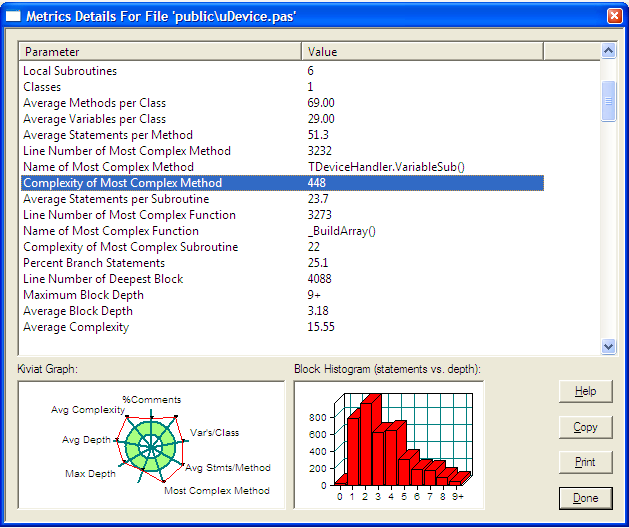

When I started my current job almost three years ago, we inherited a large, organic code base. To my surprise, the average cyclomatic complexity was quite low, just over 3. To my much greater surprise, the most complex method had a complexity of 448!

Three years ago I was new to this code base, and had larger concerns. I looked at the routine in question, but since I didn’t understand what it was doing, or its importance in the system, I left it alone, but kept it in the back of my mind.

A couple of months ago I had a task that involved modifying this highly complex routine, so I took the time to really go through it and understand what it was doing. I found that it was actually something fairly simple – looking through text for variable tokens and replacing them with real values – but it was doing a whole heck of a lot of it. Hence the complexity number.

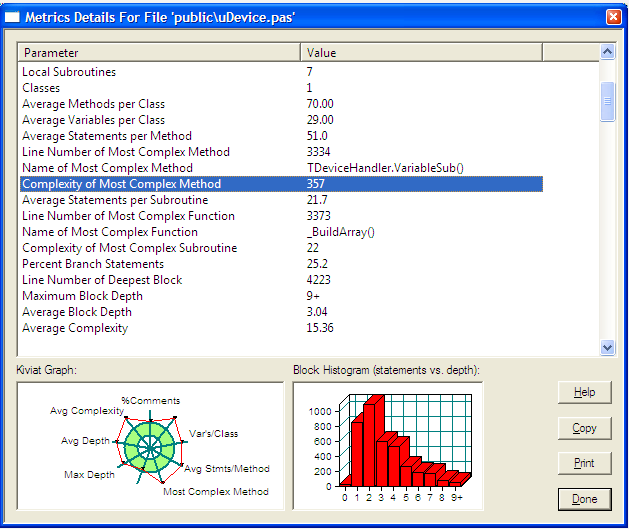

I also found that it was doing some text formatting, which is a different sort of operation. Aha! Here was an opportunity to perform an Extract Method refactoring. The result was a significant reduction in the complexity value, from 448 down to 357.

The newly extracted method had a complexity of 90, which is about what you’d expect – the complexity isn’t removed from the code, after all. Instead it’s broken up into smaller, less complex pieces.
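Here's a toy version of that kind of Extract Method split, as a sketch only. The real routine isn't shown here, so the function names (`render_before`, `render_after`, `tidy`) and the tokens are all made up. The complexity figures in the comments use the simple "decision points plus one" count.

```python
def render_before(text: str, values: dict) -> str:
    # 4 decision points -> complexity 5
    if "{name}" in text:                      # token replacement
        text = text.replace("{name}", values["name"])
    if "{date}" in text:
        text = text.replace("{date}", values["date"])
    if text.endswith(" "):                    # text formatting
        text = text.rstrip()
    if not text.endswith("."):
        text += "."
    return text


def tidy(text: str) -> str:
    # Extracted formatting step: 2 decision points -> complexity 3
    if text.endswith(" "):
        text = text.rstrip()
    if not text.endswith("."):
        text += "."
    return text


def render_after(text: str, values: dict) -> str:
    # Token replacement only: 2 decision points -> complexity 3
    if "{name}" in text:
        text = text.replace("{name}", values["name"])
    if "{date}" in text:
        text = text.replace("{date}", values["date"])
    return tidy(text)


values = {"name": "Ann", "date": "Monday"}
print(render_after("Hello {name} on {date} ", values))  # Hello Ann on Monday.
```

The four decision points are all still there. They just live in two routines of complexity 3 instead of one routine of complexity 5.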

Now, 357 (and 90) are still very high cyclomatic complexity values, given that anything over 20 or so is considered dangerously complex and fragile. But in the case of these routines, the complexity value is misleading. The routines are just long series of “if the text says this, change it to that” operations. They’ve got a huge number of such choices, but in actual practice the code is easy to read, understand and modify.
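As a small illustration of why the number can mislead, here are two hypothetical routines that score the same under the simple "decision points plus one" count. Neither comes from the code base I've been describing, and the names and tokens are invented. The first is a flat run of independent replacements, and the second nests its decisions.

```python
def expand_flat(text: str, v: dict) -> str:
    # Three independent checks -> complexity 4
    if "{user}" in text:
        text = text.replace("{user}", v["user"])
    if "{host}" in text:
        text = text.replace("{host}", v["host"])
    if "{port}" in text:
        text = text.replace("{port}", v["port"])
    return text


def route_nested(kind: str, size: int, urgent: bool) -> str:
    # Three nested checks -> also complexity 4
    if kind == "parcel":
        if size > 10:
            if urgent:
                return "air-freight"
            return "truck"
        return "courier"
    return "mail"


print(expand_flat("{user}@{host}:{port}",
                  {"user": "ann", "host": "example.org", "port": "22"}))
print(route_nested("parcel", 20, True))
```

Both come out at 4, but in the flat version each `if` can be read on its own, while in the nested version every branch depends on the ones above it. The metric counts the paths without telling you how tangled they are.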

Lesson: cyclomatic complexity is a metric worth keeping tabs on, but a bad CC value doesn’t always mean bad code.